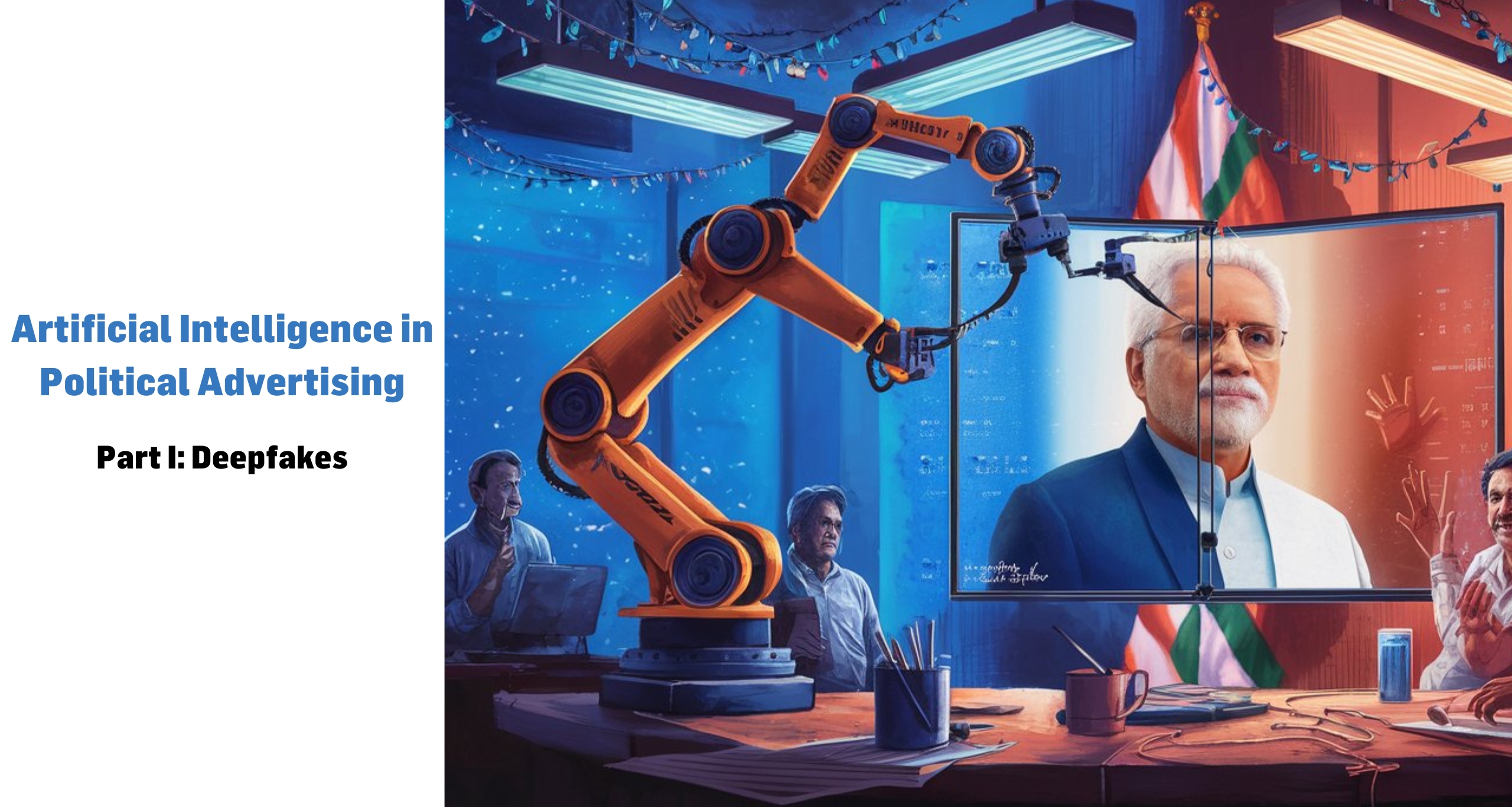

Artificial Intelligence in Political Advertising Part I: Deepfakes

The rise of artificial intelligence (AI), and of deepfake technology in particular, has left an indelible mark on India’s electoral fabric. Deepfakes, media fabricated or manipulated with AI, present a grave threat to democratic processes, especially during the electoral cycle.

The advent of deepfakes has ushered in an era in which doctored videos and audio clips can portray political figures making statements they never made or taking actions they never took. This technology can sway voter sentiment, distort reality, and corrode public faith in our electoral machinery.

The influence of AI and deepfake technology is palpable not just within our borders but across the globe. In India, the misuse of deepfakes to peddle false narratives about candidates, misrepresent their positions on critical issues, and even manufacture fraudulent evidence strikes at the very heart of our electoral integrity.

Take, for instance, the AI-generated avatar of Prime Minister Narendra Modi that circulated on WhatsApp. In a demo video of uncertain origin, the avatar addresses voters by name, showcasing both the potential for hyper-personalized outreach in a nation of nearly a billion voters and the power AI wields in shaping electoral narratives.

Similarly, a viral video appeared to show Bollywood icon Aamir Khan ridiculing the ruling BJP for unfulfilled promises, accompanied by a voice suspiciously similar to his urging support for the opposition. Khan promptly denounced the video as a sham. The episode, yet another instance of AI-driven deception, underscores the ease with which deepfakes can mislead the electorate.

The ramifications of illicit deepfake deployment in Indian elections cannot be overstated. Such acts not only imperil the fairness and transparency of our democratic process but also jeopardize public trust in electoral outcomes. The fabrication of false evidence through AI poses a serious threat to informed decision-making in a democratic society.

Urgent steps are essential to counter the risks posed by deepfakes in our electoral discourse. Public education and awareness campaigns are vital to empower voters with the discernment needed to navigate AI-generated deception. Equally crucial are robust legislative measures aimed at curbing the insidious use of deepfakes in political campaigns.

Mitigation Strategies for Deepfake Risks in Indian Elections:

- Public Education: Increase awareness among voters about the existence of deepfakes and provide guidance on how to identify and handle deceptive content.

- Regulatory Framework: Implement stringent regulations governing the creation and dissemination of deepfakes in political campaigns, with penalties for violators.

- Technical Solutions: Invest in AI-powered tools that can detect and counter deepfakes, ensuring the authenticity of political content.

- Collaboration: Foster partnerships between government agencies, technology companies, and civil society to collectively combat the misuse of deepfake technology in elections.

- Transparency Measures: Require political entities to place disclaimers on AI-generated content in campaign materials to enhance transparency and accountability.

- International Cooperation: Engage in collaborations with global partners to exchange best practices and strategies for addressing the challenges posed by deepfakes in electoral processes.

In navigating this formidable challenge, India must tread cautiously, leveraging technology’s benefits while staunchly guarding against its misuse, so as to safeguard the very essence of our democratic ethos. Follow Sanket Communications for more insights into the use of AI in elections.